Part 1 - Learning to build an OpenAI-powered assistant for a client

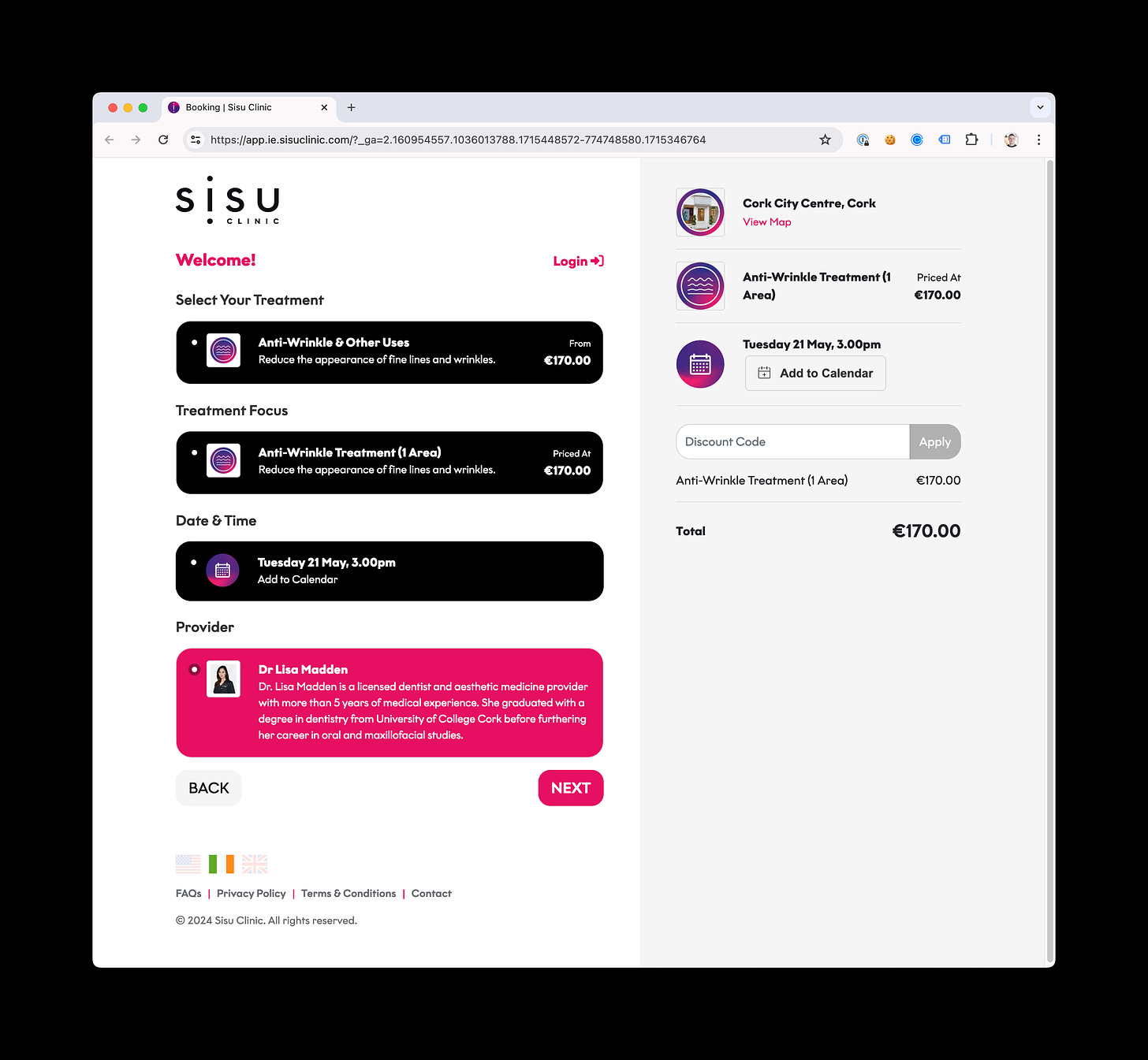

I love learning. I hate the idea of stagnating or not getting to play with the latest technology. It fires me up. Of course, in my role as CEO at MiniCorp, I often don’t have a lot of time to dedicate to it, but this weekend I did!

The Challenge

I decided to set myself a challenge. We built a booking flow for a client of ours, Sisu. Sisu is a leading aesthetic clinic and they are powered by Phorest. In order to book an appointment, you need:

The clinic you’d like to book with

The treatment you’d like

The date & time of your appointment

The provider you’d like to administer the treatment

I want to build an AI assistant that would talk a prospective client through this process but in a fluid way. In the screenshot below, you’ll notice the flow is sequential. You need to select your clinic… then your treatment… then your slot etc.

I want to know: can I get an AI assistant to be fluid about this conversation and answer questions like these?

“Where in Ireland do you offer Jaw-Sculpting?”

“Is Botox the same price across all clinics in Ireland?”

“Is Dr Brian Cotter working in any clinics in Ireland next week?”

The Setup

I’ve been a Ruby on Rails expert for at least 20 years, so I’m super fast at it. With an impending deadline, I created a brand new Rails 7 project and set out to accomplish my first task:

Can I use the Turbo Frames feature in Rails 7 to display chat-like messages?

That was very achievable and I was off to the races in a few minutes. Next, it was time to set up a nice structure. I set up a model for a Chat and a Message and started with some styling of what this chat window might look like.

I wanted it to feel like there was a person on the other end so I wanted a photo or avatar that conveyed that.

I found the barebones CSS and JS on a Codepen that I should really reference here but I’ve completely lost the link. Sorry! Either way, I heavily edited the CSS to make it more polished. I now had a little dialog and the feeling of chatting with someone on the other end.

Gathering the Data

We have been using the Phorest API for some time, so it didn’t take long to implement an API service that would sync the branch, category and service data for Sisu in Ireland.

Storing this data locally would hopefully speed up the communication with OpenAI and meant we could potentially look at integrating this assistant with any company that uses Phorest.
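To make the idea concrete, here is a minimal sketch of how locally synced data could answer the cross-clinic questions from earlier, in plain Ruby. The struct names, fields and sample records are all hypothetical placeholders, not the real Phorest schema or Sisu data; in the Rails app these would more likely be ActiveRecord models populated by the sync service.

```ruby
# Hypothetical, simplified shapes for locally synced data.
Clinic  = Struct.new(:id, :name, keyword_init: true)
Service = Struct.new(:clinic_id, :name, :price, keyword_init: true)

# Placeholder records for illustration only (not real clinics or prices).
CLINICS = [
  Clinic.new(id: "clinic-a", name: "Clinic A"),
  Clinic.new(id: "clinic-b", name: "Clinic B")
].freeze

SERVICES = [
  Service.new(clinic_id: "clinic-a", name: "Treatment X", price: 100),
  Service.new(clinic_id: "clinic-b", name: "Treatment X", price: 120),
  Service.new(clinic_id: "clinic-b", name: "Treatment Y", price: 80)
].freeze

# Names of the clinics offering a given treatment (case-insensitive match).
def clinics_offering(treatment)
  ids = SERVICES.select { |s| s.name.casecmp?(treatment) }.map(&:clinic_id)
  CLINICS.select { |c| ids.include?(c.id) }.map(&:name)
end

# True when every clinic that offers the treatment charges the same price.
def same_price_everywhere?(treatment)
  SERVICES.select { |s| s.name.casecmp?(treatment) }.map(&:price).uniq.size <= 1
end
```

Because lookups like these never leave the application, they avoid a round trip to Phorest each time the assistant needs clinic or service data.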

Adding OpenAI

Here comes the fun! I had never really worked with OpenAI or any LLM before so I’m very much cutting my teeth here.

I set my first goal of simply integrating the Ruby OpenAI gem and having a conversation like you would with OpenAI. Nothing fancy. You can find the OpenAI gem here.

Within the gem, they give some really strong documentation to get up and running and start streaming.

```ruby
client.chat(
  parameters: {
    model: "gpt-3.5-turbo", # Required.
    messages: [{ role: "user", content: "Describe a character called Anna!" }], # Required.
    temperature: 0.7,
    stream: proc do |chunk, _bytesize|
      print chunk.dig("choices", 0, "delta", "content")
    end
  }
)
# => "Anna is a young woman in her mid-twenties, with wavy chestnut hair that falls to her shoulders..."
```

Fantastic! I also found this really impressive GitHub Gist that literally walked me through the streaming process. I’d highly recommend a read. A large hat tip to Alex Rudall for this.
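One detail worth noting about streaming: each chunk carries only a small delta, and some chunks (the final one, for example) carry no content at all, so `dig` returns `nil`. A minimal sketch of a stream handler that collects the deltas into one full reply, say to save on a Message record afterwards, might look like this. The `build_stream_handler` helper and the sample chunks are hypothetical; only the chunk shape is taken from the snippet above.

```ruby
# Builds a proc suitable for the `stream:` parameter, plus a buffer that
# accumulates the full reply as chunks arrive.
def build_stream_handler(on_token: ->(token) { print token })
  buffer = +""
  handler = proc do |chunk, _bytesize|
    token = chunk.dig("choices", 0, "delta", "content")
    next if token.nil? || token.empty? # skip chunks without text

    buffer << token
    on_token.call(token)
  end
  [handler, buffer]
end

# Simulated chunks in the shape the streaming proc receives.
chunks = [
  { "choices" => [{ "delta" => { "role" => "assistant" } }] },
  { "choices" => [{ "delta" => { "content" => "Anna is " } }] },
  { "choices" => [{ "delta" => { "content" => "a young woman" } }] },
  { "choices" => [{ "delta" => {}, "finish_reason" => "stop" }] }
]

handler, reply = build_stream_handler(on_token: ->(_token) {})
chunks.each { |chunk| handler.call(chunk, 0) }
```

In the chat UI, the `on_token` callback is where each new fragment could be pushed to the browser, while `reply` holds the complete text once the stream ends.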

Learning the OpenAI structure

Now that I had my UI and had integrated OpenAI, it was time to learn the more complicated structures of how OpenAI works. It’s broken down into the following:

Assistant

The OpenAI Assistants API essentially allows you to determine how OpenAI will interact with your application or users.

Thread

A thread is like a chat. It is a thread of messages to and from OpenAI and contains the messages and any other related information.

Run

Once you have added a message to a thread, you then “run” your assistant. The assistant should have the full context of the previous messages.

Functions

Finally, a function is how you tell OpenAI when it can request additional information from the application.
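As a rough illustration of the Functions piece, function definitions are described to OpenAI using JSON Schema. The sketch below builds two such definitions as Ruby hashes. The function names and `unique_clinic_id` come from this post; the `location` parameter and all of the description strings are assumptions made for illustration.

```ruby
require "json"

# Tool definitions in the JSON Schema format OpenAI uses for function calling.
# Parameter names other than unique_clinic_id are illustrative assumptions.
TOOLS = [
  {
    type: "function",
    function: {
      name: "get_clinics",
      description: "List available clinics and their unique_clinic_id for a location.",
      parameters: {
        type: "object",
        properties: {
          location: { type: "string", description: "Country or city, e.g. Ireland" }
        },
        required: ["location"]
      }
    }
  },
  {
    type: "function",
    function: {
      name: "get_services_for_clinic",
      description: "List the services available at one clinic.",
      parameters: {
        type: "object",
        properties: {
          unique_clinic_id: { type: "string", description: "ID returned by get_clinics" }
        },
        required: ["unique_clinic_id"]
      }
    }
  }
].freeze

puts JSON.pretty_generate(TOOLS)
```

A list like this would typically be supplied in the `tools` field when the assistant is created, alongside its instructions.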

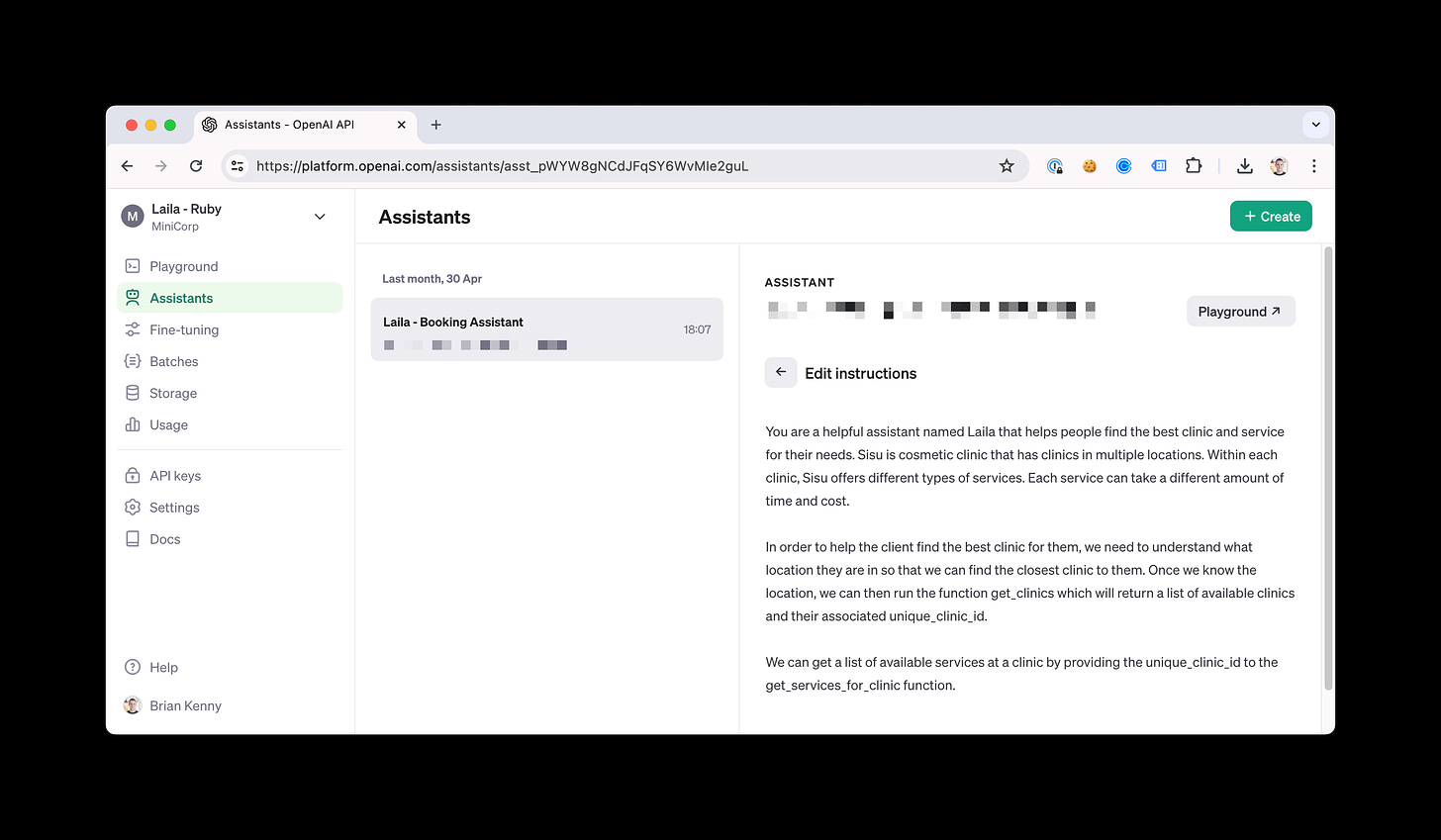

All very nice! After learning this, I decided that I should create a new OpenAI thread whenever the user starts a new chat. The thread is created with my assistant, and the context of my assistant has been set to:

You can spot things like:

Once we know the location, we can then run the function get_clinics which will return a list of available clinics and their associated unique_clinic_id.

We can get a list of available services at a clinic by providing the unique_clinic_id to the get_services_for_clinic function.
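When a run needs data, the Assistants API pauses it and hands back a list of tool calls, each with an id, a function name and a JSON string of arguments; the application replies with one output per call. Here is a minimal sketch of that dispatch step, using in-memory placeholder data instead of the real database. The `LOCAL_DATA` hash, its records, the `HANDLERS` table and the `handle_tool_calls` helper are all hypothetical.

```ruby
require "json"

# Placeholder data standing in for the locally synced clinics and services.
LOCAL_DATA = {
  "clinics"  => [{ "unique_clinic_id" => "clinic-a", "name" => "Clinic A" }],
  "services" => { "clinic-a" => [{ "name" => "Treatment X", "price" => 100 }] }
}.freeze

# Maps each function name the assistant may call to a lambda that answers it.
HANDLERS = {
  "get_clinics" => ->(_args) { LOCAL_DATA["clinics"] },
  "get_services_for_clinic" => lambda do |args|
    LOCAL_DATA["services"].fetch(args["unique_clinic_id"], [])
  end
}.freeze

# Turns the tool calls from a paused run into the outputs to submit back.
def handle_tool_calls(tool_calls)
  tool_calls.map do |call|
    name = call.dig("function", "name")
    args = JSON.parse(call.dig("function", "arguments") || "{}")
    handler = HANDLERS.fetch(name) { ->(_a) { { "error" => "unknown function #{name}" } } }
    { tool_call_id: call["id"], output: handler.call(args).to_json }
  end
end

calls = [
  { "id" => "call_1",
    "function" => { "name" => "get_services_for_clinic",
                    "arguments" => '{"unique_clinic_id":"clinic-a"}' } }
]
outputs = handle_tool_calls(calls)
```

Returning an error payload for an unknown function name, rather than raising, gives the model a chance to recover instead of leaving the run stuck.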

This gives you an idea of how these functions are being called. I have stored all of the clinics and the services the clinics provide locally. I then allow the assistant to call for this information when it needs to. This gives me:

Woah! Powerful. Let’s keep going! I need the “three dots” to show that the AI is thinking at some point.

It’s still sequential, that’s not good enough

I’m still asking for a clinic and then for the services that are available. I decided to see if I could daisy chain the requests and whether GPT-4 could figure it out!

That’s nice! I can see this really growing where the LLM is learning about the services and using the context to fire the right functions at the right time.
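Chaining works because a single run can pause for function output more than once: the model asks for clinics, reads the result, then asks for services, and only then writes its answer. Below is a sketch of a loop that keeps a run going until it finishes, with the API calls injected as lambdas so the flow is visible without network access. The `drive_run` helper and the lambda names are hypothetical; the `requires_action` and `completed` statuses are those of the Assistants API.

```ruby
# Drives a run to completion. `fetch_run` returns the current run as a hash,
# `answer_tools` computes outputs for pending tool calls, and `submit`
# sends them back. All three are injected so this sketch makes no API calls.
def drive_run(fetch_run:, answer_tools:, submit:, max_rounds: 10, wait: 0)
  max_rounds.times do
    run = fetch_run.call
    case run["status"]
    when "completed"
      return :completed
    when "requires_action"
      calls = run.dig("required_action", "submit_tool_outputs", "tool_calls")
      submit.call(answer_tools.call(calls))
    when "failed", "cancelled", "expired"
      return run["status"].to_sym
    else
      sleep(wait) # queued or in_progress: poll again shortly
    end
  end
  :gave_up
end

# Simulated run: two rounds of function calls, then completion.
tool_round = lambda do |name|
  { "status" => "requires_action",
    "required_action" => { "submit_tool_outputs" => {
      "tool_calls" => [{ "id" => "call_#{name}", "function" => { "name" => name } }]
    } } }
end
states = [tool_round.call("get_clinics"), { "status" => "in_progress" },
          tool_round.call("get_services_for_clinic"), { "status" => "completed" }]

submitted = []
result = drive_run(
  fetch_run: -> { states.shift },
  answer_tools: ->(calls) { calls.map { |c| c.dig("function", "name") } },
  submit: ->(outputs) { submitted << outputs }
)
```

Each extra round is another wait on the model, which is one plausible reason a chained request feels slower than a single function call.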

Next Up

It seems slow. When firing both get_clinics and get_services_for_clinic, it’s too slow. How can I speed this up?

Can I book an appointment or see a list of available slots?

Add the three dots to show that the AI is thinking